This year’s exam chaos highlights a big issue with predicting A-level results. The evidence suggests that teachers typically over-predict, and some school students’ grades are harder to predict than others. This raises the question of why we continue to base university applications on these predictions.

The cancellation of this year’s public examinations for school students due to the Covid-19 pandemic has led to young people being awarded ‘centre assessment grades’ – their teachers’ predictions of which grades they were most likely to achieve.

Predicted grades are a common feature of the UK’s education system, used each year to facilitate university applications. Yet research shows that these predictions are highly inaccurate, and the accuracy of predictions varies across students’ achievement levels, school type and subjects studied. This suggests that the fairest solution would be to move to university applications based on A-level results rather than predictions.

How are predicted grades used?

The UK has a unique system for applying to university. Unlike most other countries, where applications are based on standardised tests or grade point averages, in the UK, applications to university are based on predictions of the grades that students are likely to achieve in their A-levels. Teachers make these predictions up to six months before the students actually take their exams, basing their decisions on a range of factors including past performance, progress and, importantly, aspirations for what the students could achieve.

Students then use these grades to apply to up to five courses at university. Universities typically respond with conditional offers: a place that depends on the applicant achieving certain grades.

For example, if a student is predicted AAA, the university could make a conditional offer of AAB. If the student then achieves AAB or above, the university is legally obliged to give a place to that student. If the student achieves ABB, the university has discretion over whether to confirm the place or withdraw the offer. In the latter case, the student may then enter ‘clearing’ – a process in which students who are not holding any offers from universities or colleges may search for a place on an unfilled course.
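The conditional-offer logic in this example can be sketched in a few lines of Python. This is an illustration only, not how UCAS or any university processes results. The function names are hypothetical, and the rule for ‘AAB or above’ is an assumption: the achieved grades, sorted from best to worst, must each be at least as good as the corresponding grade in the offer. Real offers may also specify particular subjects, which this sketch ignores.

```python
# Grades from best to worst; a lower index means a better grade.
GRADE_ORDER = ["A*", "A", "B", "C", "D", "E"]


def parse_grades(profile):
    """Split a grade string such as 'A*AB' into ['A*', 'A', 'B']."""
    grades = []
    for ch in profile:
        if ch == "*":
            grades[-1] += "*"  # attach the star to the preceding 'A'
        else:
            grades.append(ch)
    return grades


def meets_offer(achieved, offer):
    """Assumed rule: compare the sorted grade profiles position by position."""
    rank = GRADE_ORDER.index
    a = sorted(parse_grades(achieved), key=rank)
    o = sorted(parse_grades(offer), key=rank)
    return len(a) >= len(o) and all(rank(x) <= rank(y) for x, y in zip(a, o))


def offer_outcome(achieved, offer):
    """Return what happens to a conditional offer once results are known."""
    if meets_offer(achieved, offer):
        return "place confirmed"
    # The university may still confirm the place; otherwise the student
    # can look for an unfilled course through clearing.
    return "university discretion"


print(offer_outcome("AAB", "AAB"))  # place confirmed
print(offer_outcome("ABB", "AAB"))  # university discretion
```

Under this assumed rule, a student with an AAB offer who achieves AAA or A*AB is also confirmed, while ABB falls to the university’s discretion, as in the example above.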

In short, teachers’ predictions are high stakes – they help students decide which courses to apply to, and they inform the offers that universities make to students.

How accurate are predicted grades?

Research on predicted grades is relatively scarce due to restrictions on access to data from the Universities and Colleges Admissions Service (UCAS). Where studies have been able to access the data, the findings are broadly consistent, suggesting that this evidence is reliable.

First, studies show that it is difficult to predict grades accurately. Studies looking at individual grades show that only about half are correctly predicted (Delap, 1994; Everett and Papageorgiou, 2011). Studies looking at the accuracy of predictions for school students’ best three A-levels show that even fewer are correctly predicted on this measure (Gill and Benton, 2014; UCAS, 2016; Murphy and Wyness, 2020).

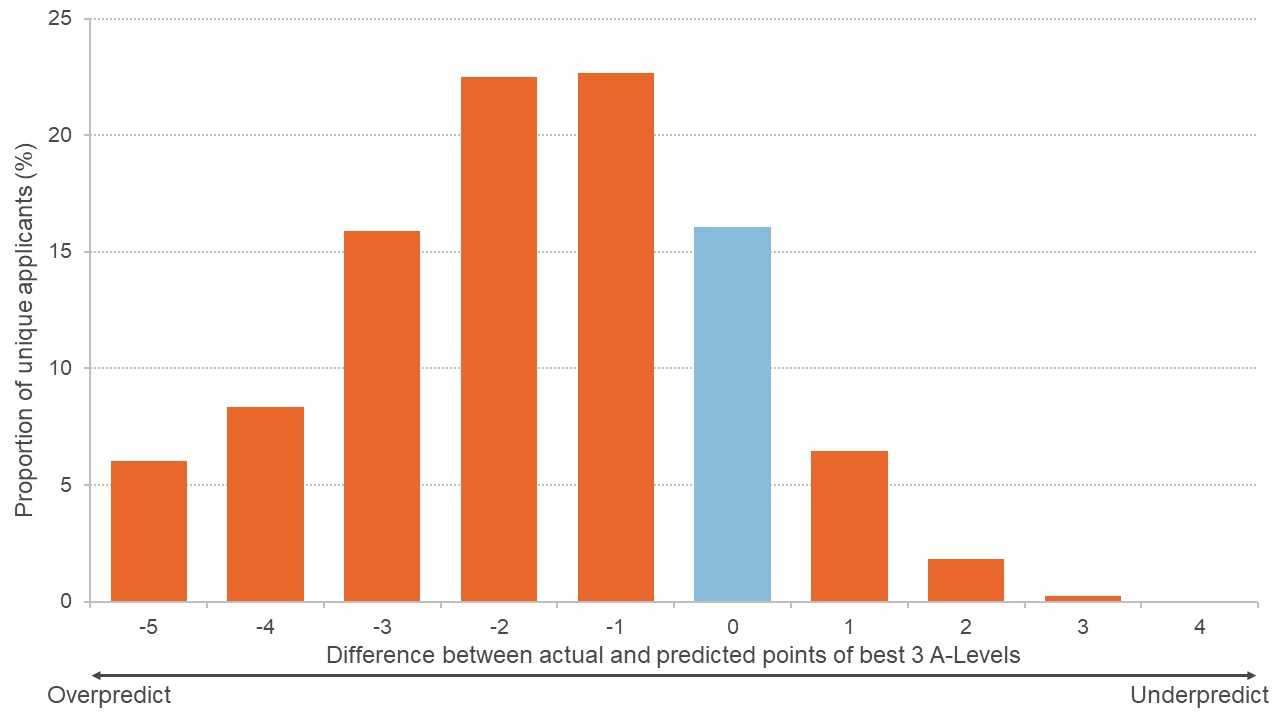

Second, across all studies, the vast majority of inaccurate predictions result from teachers over-predicting rather than under-predicting grades. Around half of individual grades and 75% of students (based on best three A-levels) are over-predicted by at least one grade (Delap, 1994; Everett and Papageorgiou, 2011; Murphy and Wyness, 2020). As Figure 1 shows, while only 16% of students are correctly predicted based on their best three A-levels, the majority of students are over-predicted (75%) and only a small proportion are under-predicted (8%).

We do not know the reasons for this over-prediction, but it is perhaps not surprising that teachers tend towards being optimistic about the grades that their students might achieve. It is likely that teachers will want to give them the benefit of the doubt – particularly as these predictions are so critical when it comes to students’ chances of gaining a place at university.

Figure 1: Distribution of the difference between actual and predicted grades

Source: Murphy and Wyness, 2020

Notes: Each point on the x-axis represents the achieved points score of the applicant minus the predicted points score of the applicant. The points score is defined by UCAS as the points attached to the highest three A-level grades achieved by the applicant (A*=6, A=5, B=4, C=3, D=2, E=1).
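The points-score measure described in the notes to Figure 1 can be sketched as follows. This is a minimal illustration using the grade-to-points scale given in the notes; the function names are hypothetical and the code is not taken from Murphy and Wyness (2020).

```python
# Points scale from the notes to Figure 1.
POINTS = {"A*": 6, "A": 5, "B": 4, "C": 3, "D": 2, "E": 1}


def best_three_points(grades):
    """Sum the points of the three highest grades in a list of grades."""
    return sum(sorted((POINTS[g] for g in grades), reverse=True)[:3])


def prediction_gap(achieved, predicted):
    """Achieved points minus predicted points (the x-axis of Figure 1)."""
    return best_three_points(achieved) - best_three_points(predicted)


def classify(gap):
    """Label a student by the sign of the gap."""
    if gap < 0:
        return "over-predicted"
    if gap > 0:
        return "under-predicted"
    return "correctly predicted"


# A student predicted AAA (15 points) who achieves ABB (13 points).
gap = prediction_gap(["A", "B", "B"], ["A", "A", "A"])
print(gap, classify(gap))  # -2 over-predicted
```

One caveat on this sketch: a gap of zero means the points totals match, which is not quite the same as every individual grade being predicted correctly. A student predicted AAB who achieves A*BB has 14 points on both sides.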

A third finding from research is that the grades of some groups of students are harder to predict than others. For example, in all studies, high achievers are more accurately predicted than low achievers. This is perhaps not surprising – teachers tend towards over-prediction, but very high achievers cannot be over-predicted, so their predictions tend to be more accurate. Therefore, it is important to take account of students’ achievement when looking at prediction accuracy by student characteristics.

What are the differences in accuracy by student and school type?

There is some evidence that prediction accuracy varies by the background of the student or the school they attend.

After taking account of students’ achievement, one study finds that high achievers from lower socio-economic status (SES) families or from comprehensive schools receive less generous predictions than their high-achieving, high socio-economic status, or grammar and private school counterparts (Murphy and Wyness, 2020). One potential reason for this might be that it is simply harder to predict the A-level grades of these students based on their past performance.

Testing this, one study attempts to predict students’ A-level grades using detailed data on their GCSE performance in different subjects (Anders et al, 2020). It finds that high-achieving students in comprehensive schools are more likely to be under-predicted by the models than their grammar and private school counterparts, who are more accurately predicted – lending weight to the hypothesis that the grades of certain types of student are harder to predict than others. The study also highlights the difficult task that teachers face each year, particularly for young people with more variable trajectories from GCSE to A-level.

What are the implications?

There is no direct evidence on the implications of this inaccuracy in predicted grades in terms of university enrolment and outcomes, due to the lack of available data that link predicted grades, university applications and university enrolment.

One study (Murphy and Wyness, 2020) provides suggestive evidence that students who are under-predicted are more likely to attend an institution where they are over-qualified relative to other students. But there is a lack of reliable evidence on the effect of prediction accuracy on university choice and university outcomes.

Could predicted grades be abolished?

The UK’s use of predicted grades has been debated in the past, and many groups – such as the Labour Party and the Sutton Trust – have called for a move to ‘post-qualification applications’ (PQA).

In practical terms, such a move remains controversial. To allow students to apply to university on the basis of their actual A-level grades, it is likely that we would need to move A-level exams forward – shortening the school year – and the beginning of the university term back. But a small number of studies have illustrated how this could work in practice, showing that a system of PQA is possible (UCU, 2019; EDSK, 2020).

Where can I find out more?

Minority report: the impact of predicted grades on university admissions of disadvantaged groups: Richard Murphy and Gill Wyness examine A-level prediction accuracy by student and school type.

Grade expectations: how well can we predict future grades based on past performance?: Jake Anders, Catherine Dilnot, Lindsey Macmillan and Gill Wyness show that predicting grades using statistical and machine learning methods based on young people’s prior achievement can only modestly improve the accuracy of predictions compared with teachers.

Post-qualification application: a student-centred model for higher education admissions in England, Northern Ireland and Wales: Graeme Atherton (NEON) and Angela Nartey (UCU) present a model for post-qualification applications.

Who are UK experts on this question?

- Gill Wyness, Centre for Education Policy and Equalising Opportunities, UCL, London

- Lindsey Macmillan, Centre for Education Policy and Equalising Opportunities, UCL, London

- Richard Murphy, Centre for Economic Performance, LSE

- Graeme Atherton (NEON)

- Angela Nartey (UCU)